Netra Radhakrishnan's Portfolio

Turning ideas into meaningful experiences

AI Based Evaluator

Introduction

Smart Evaluator is an AI-powered tool designed to simplify and enhance the evaluation process for evaluators by providing grades and customized comments for each evaluation. It automates assessments, ensures fairness, and delivers consistent, insightful feedback across diverse use cases. With its intuitive design and AI-driven capabilities, Smart Evaluator optimizes workflows and empowers evaluators with reliable results.

My Role

I designed a seamless and intuitive user interface tailored to the needs of evaluators, researching industry standards and identifying optimal interface elements to enhance usability and efficiency. Collaborating with the UI team, managers, and stakeholders, I ensured the design aligned with project goals. Additionally, I crafted and refined the AI’s evaluation logic by developing and testing prompts to deliver accurate assessments and meaningful feedback. This required a strong understanding of both user needs and AI capabilities to optimize the evaluation process for consistency and reliability.

The Process

Research

During the research phase, I analyzed existing educational assessment tools on the market and conducted user research.

Currently, the educational assessment space is dominated by two types of players: established platforms offering comprehensive grading solutions (like Gradescope and Crowdmark) and newer AI-powered tools (such as MagicSchool and TestMarkr) that focus on automating the feedback process.

- Most successful platforms prioritize collaborative grading capabilities

- AI integration is becoming standard, particularly for feedback generation

- Analytics and performance tracking are essential features

- Support for both digital and paper-based assessments is common

Pain Points:

- Time-consuming individual feedback process

- Inconsistency in grading across multiple evaluators

- Difficulty in tracking class-wide performance trends

- Need for reusable feedback templates

Opportunities Identified

- Most existing solutions lack seamless integration between different assessment types

- There’s limited support for real-time collaboration between teachers

- Few platforms offer comprehensive behavioral analysis tools

- The market lacks intuitive, user-friendly interfaces for complex grading tasks

User Research

Methodology

- In-depth interviews with recruitment platform evaluators

- Analysis of their current grading workflows

- Review of existing rubrics and evaluation criteria

Key Pain Points

- Inconsistent Evaluation

Despite having predefined rubrics, evaluators struggled to maintain objectivity and consistency when grading open-ended responses across all candidates.

- Scoring Standardization

Each evaluator might interpret the same answer differently, leading to potential disparities in scoring between candidates with similar responses.

- Time Management

Thoroughly reviewing each response while maintaining fairness and attention to detail proved time-consuming, especially with large numbers of candidates.

This research emphasized the need for a solution that streamlines the evaluation process, ensuring fairness and consistency across all candidate assessments. It informed my design decisions by underscoring the importance of combining the robustness of traditional grading tools with the efficiency of AI-powered automation, while prioritizing user experience and accessibility.

Designs

After thorough market research and user interviews, I focused on creating a solution that would address the core challenges faced by evaluators while maintaining simplicity and efficiency in the interface.

The design approach prioritizes consistency in evaluation while reducing cognitive load for evaluators. Each element was carefully considered to support quick decision-making without compromising assessment quality.

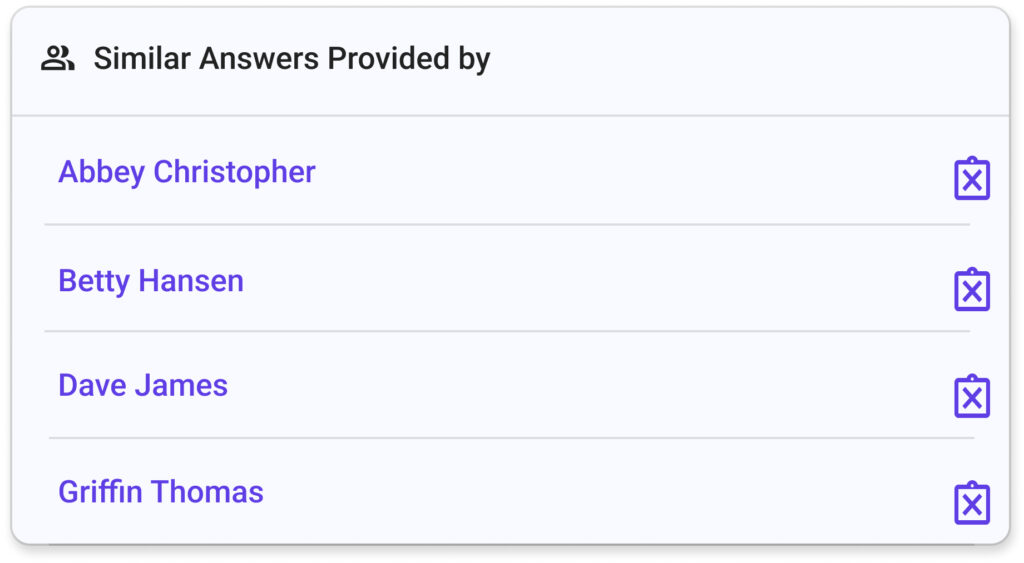

I designed an intelligent interface that automatically groups similar candidate responses. This allows evaluators to assess similar answers together, ensuring consistent scoring and significantly reducing evaluation time.

In the above image, different students who answered the same question are grouped together. When a plagiarism check is triggered, or when suspiciously similar answers are encountered, a button lets the evaluator mark the question as “Copied” for each candidate. Selecting that button triggers a warning pop-up.
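The grouping idea can be sketched in a few lines. This is only an illustration, not the production logic: it assumes plain string similarity from Python's standard `difflib` module, and the function names and the `0.8` threshold are hypothetical choices made for this example.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 ratio of how alike two answers are, ignoring case and extra whitespace."""
    norm_a = " ".join(a.lower().split())
    norm_b = " ".join(b.lower().split())
    return SequenceMatcher(None, norm_a, norm_b).ratio()


def group_responses(responses: dict[str, str], threshold: float = 0.8) -> list[list[str]]:
    """Greedily group candidate IDs whose answers resemble the first answer in a group.

    `threshold` is an assumed cut-off for this sketch, not a tuned value.
    """
    groups: list[list[str]] = []
    for candidate_id, answer in responses.items():
        for group in groups:
            representative = responses[group[0]]
            if similarity(answer, representative) >= threshold:
                group.append(candidate_id)
                break
        else:
            # No existing group was close enough, so start a new one.
            groups.append([candidate_id])
    return groups


if __name__ == "__main__":
    sample = {
        "c1": "The mitochondria is the powerhouse of the cell.",
        "c2": "the mitochondria is the  powerhouse of the cell",
        "c3": "Photosynthesis converts light into chemical energy.",
    }
    print(group_responses(sample))
```

Groups with more than one candidate are the ones an evaluator could score together, and the same similarity score could drive the “Copied” flag for suspiciously close answers.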

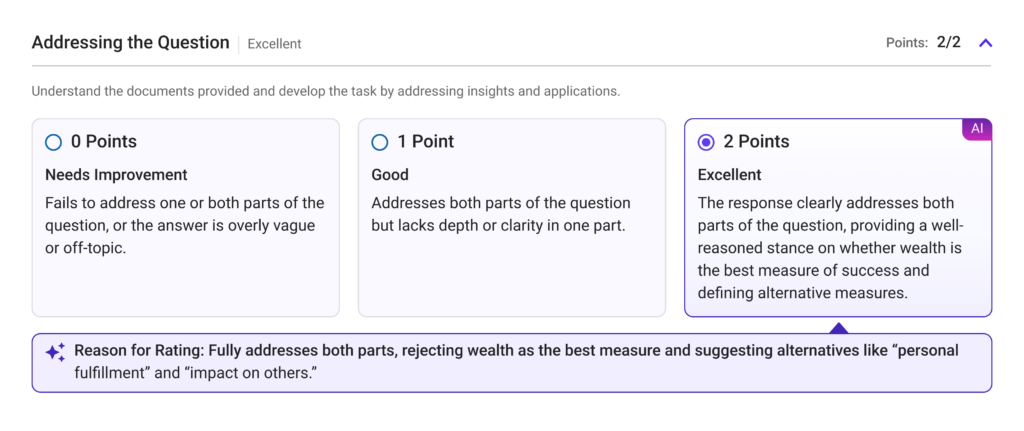

The rubric panel (shown in the image above) was designed to be both informative and interactive. Evaluators can quickly apply scoring criteria while maintaining a clear view of the candidate’s response. The system provides real-time guidance to ensure scoring consistency.

The above image shows part of a rubric that has been evaluated using the given criterion (Addressing the question) and ratings (Needs improvement, Good, Excellent). The answer is not only evaluated against the rubric; a ‘Reason for Rating’ is also provided for the rating selected for every criterion. Each score can also be manually updated by the evaluator.
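One way to picture the data behind a rubric row is sketched below. The class and field names are hypothetical and chosen for illustration; only the criterion, the three ratings, the ‘Reason for Rating’, and the manual override come from the design described here.

```python
from dataclasses import dataclass
from typing import Optional

# Rating scale taken from the rubric described above.
RATINGS = ("Needs improvement", "Good", "Excellent")


@dataclass
class CriterionResult:
    """One rubric row: the AI's rating, its reason, and an optional evaluator override."""

    criterion: str
    ai_rating: str
    reason_for_rating: str
    evaluator_rating: Optional[str] = None  # set only when the evaluator changes the score

    def __post_init__(self) -> None:
        if self.ai_rating not in RATINGS:
            raise ValueError(f"Unknown rating: {self.ai_rating!r}")

    def override(self, rating: str) -> None:
        """Record a manual update made by the evaluator."""
        if rating not in RATINGS:
            raise ValueError(f"Unknown rating: {rating!r}")
        self.evaluator_rating = rating

    @property
    def final_rating(self) -> str:
        """The evaluator's rating wins when present; otherwise the AI's rating stands."""
        return self.evaluator_rating if self.evaluator_rating is not None else self.ai_rating


if __name__ == "__main__":
    row = CriterionResult(
        criterion="Addressing the question",
        ai_rating="Good",
        reason_for_rating="The answer covers the main point but omits an example.",
    )
    row.override("Excellent")
    print(row.final_rating)
```

Keeping the AI rating and the evaluator rating as separate fields preserves the original suggestion alongside the human decision.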

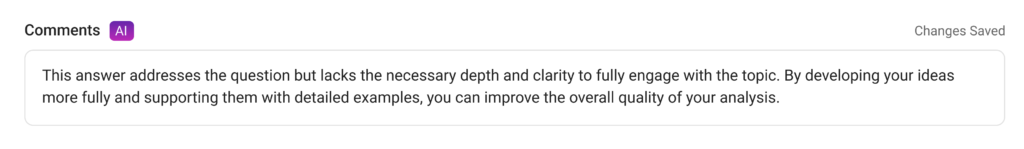

A general comment (as shown above) is provided based on the student’s overall performance on the question. The tone and style of the comment can be customised: a second-person narrative, where the feedback is addressed directly to the student, or a third-person narrative, for reference by another evaluator.
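The narrative setting could map to a prompt instruction along these lines. This is a hedged sketch: the prompt wording, the `build_comment_prompt` function, and the setting names are assumptions made for illustration and are not the prompts used in the project.

```python
# Hypothetical instruction text for each narrative setting.
NARRATIVE_INSTRUCTIONS = {
    "second_person": "Address the student directly as 'you'.",
    "third_person": "Refer to the student in the third person, as a note for another evaluator.",
}


def build_comment_prompt(question: str, answer: str, narrative: str = "second_person") -> str:
    """Assemble a prompt asking for a general comment in the chosen narrative voice."""
    if narrative not in NARRATIVE_INSTRUCTIONS:
        raise ValueError(f"Unknown narrative: {narrative!r}")
    return "\n".join(
        [
            "Write a short general comment on the overall quality of the answer.",
            NARRATIVE_INSTRUCTIONS[narrative],
            f"Question: {question}",
            f"Answer: {answer}",
        ]
    )


if __name__ == "__main__":
    print(build_comment_prompt("What is a rubric?", "A scoring guide.", "third_person"))
```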

Additional Features included:

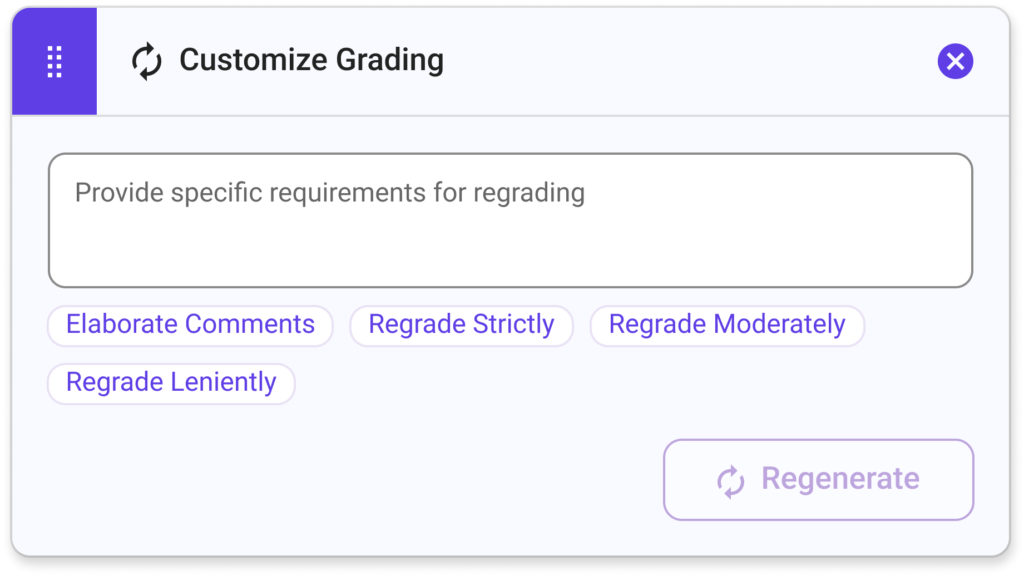

The ability to customise the evaluation provided:

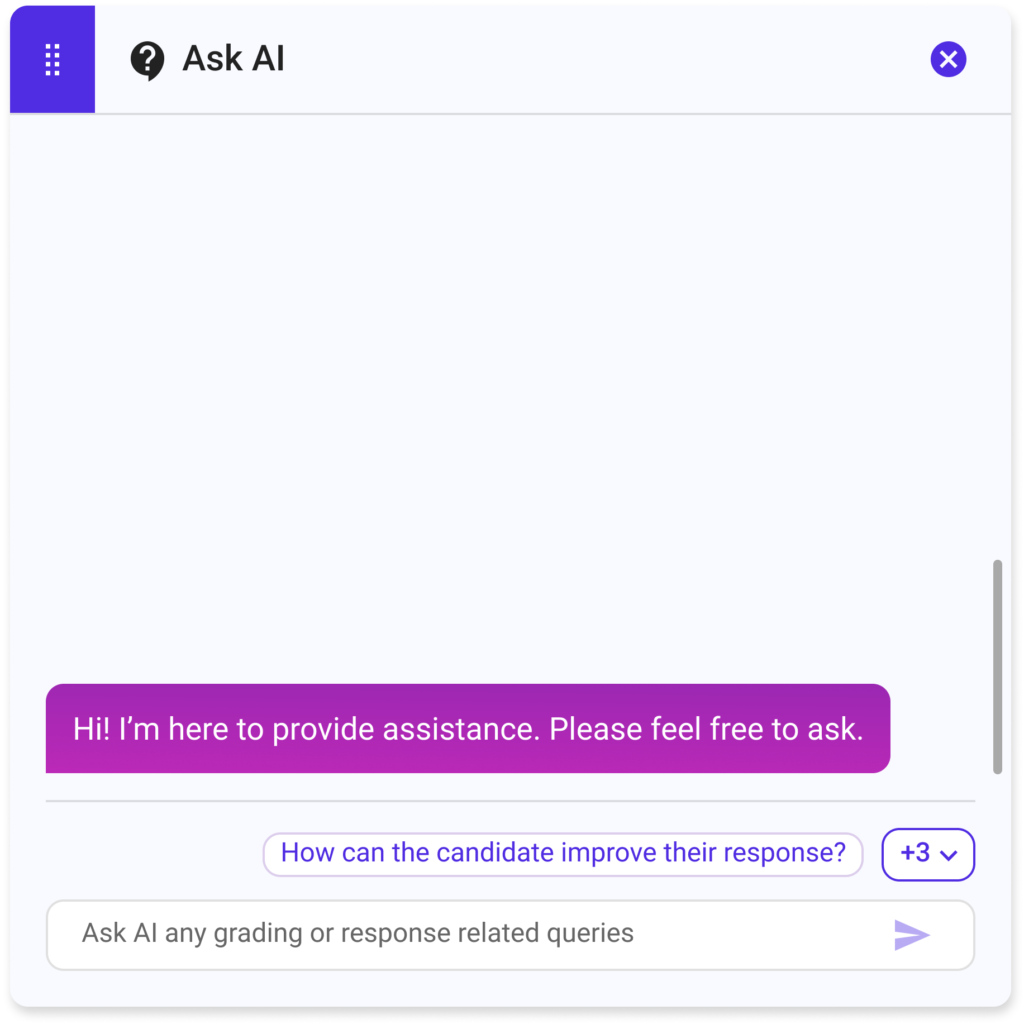

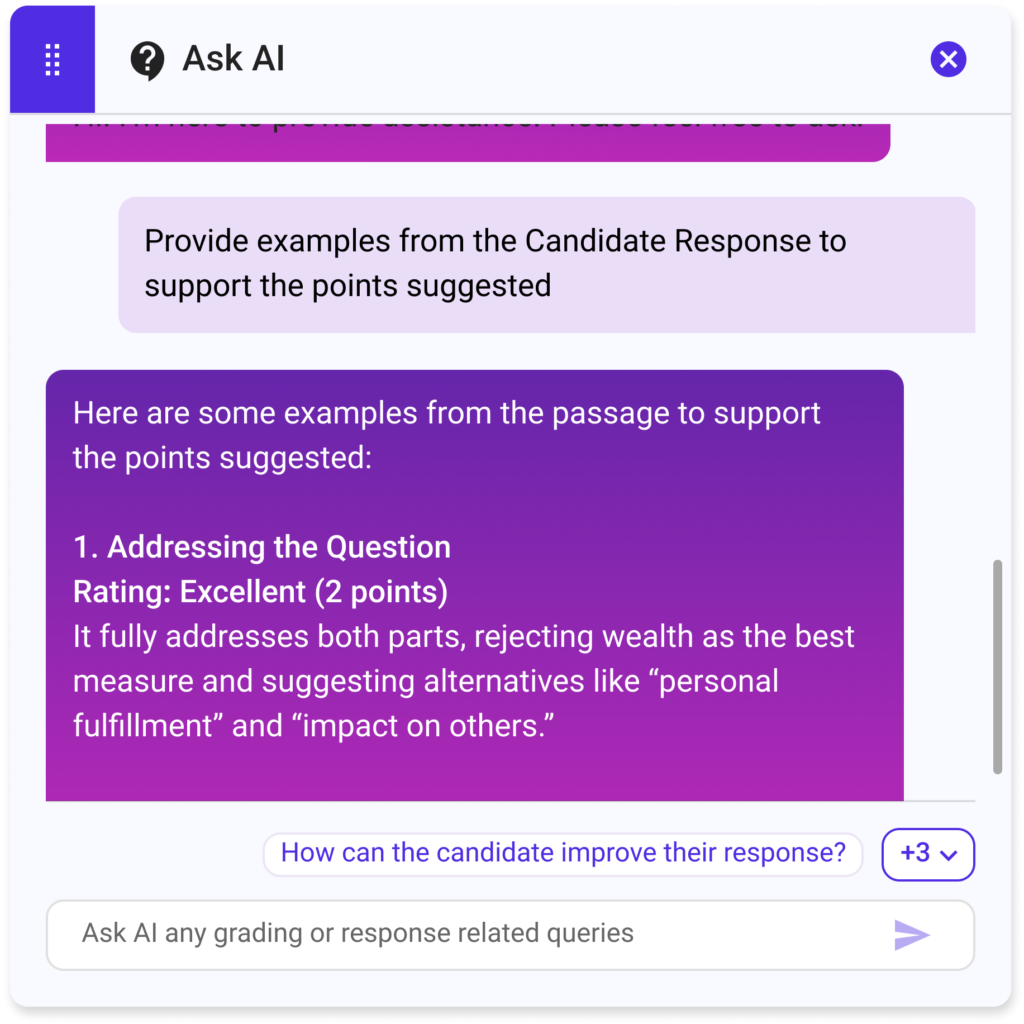

The ability to ask the AI questions about the provided evaluation, with suggested questions to ask:

The interface was optimized for speed and accuracy:

- Keyboard shortcuts for common actions

- Batch operations for similar responses

- Auto-save functionality

Accessibility Considerations

- High contrast color schemes

- Clear visual hierarchy

- Responsive design for various screen sizes (in progress)

Error Prevention

Special attention was paid to preventing common evaluation mistakes through:

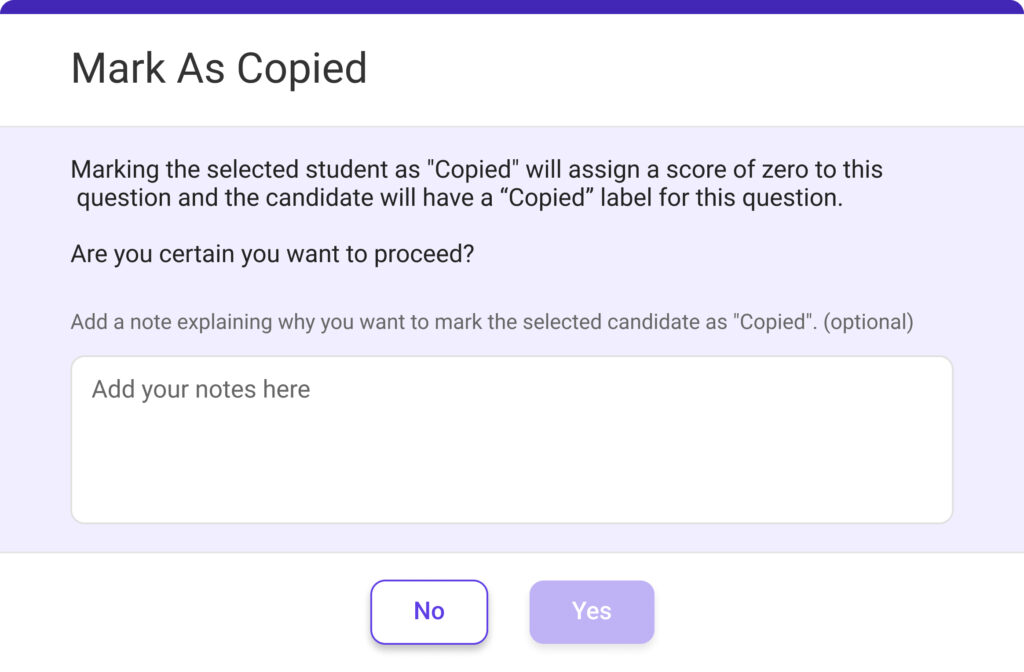

- Confirmation prompts for significant actions

- Clear warning systems

- Easy undo/redo capabilities

Conclusion

This project provided valuable insights into:

- The importance of balancing automation with human judgment.

- How thoughtful UX and UI design can significantly impact evaluation quality.

- The value of continuous user feedback in refining complex workflows.

Personal Growth

Leading this project enhanced my understanding of:

- Prompt engineering and the prompt configurations it requires

- Designing for specialized user groups with complex needs

- Balancing efficiency with accuracy in assessment tools

- Creating intuitive interfaces for data-heavy applications

- Managing the intersection of AI and human decision-making

This project demonstrates how thoughtful UX design can transform a challenging task into a streamlined, efficient process while maintaining high standards of evaluation quality.